👋 Hi, I'm Yan Li.

I build humanoid robots that learn to move, plan, and assist humans through reinforcement learning. My work bridges simulation and real-world deployment via 3D-printed humanoids, control systems, and machine learning pipelines.

Humanoid Robot Projects

Humanoid Robot Locomotion Projects

This research applies machine learning methods, particularly reinforcement learning and imitation learning, to humanoid robot locomotion. The goal is to develop robust locomotion policies in simulation and then transfer them to a custom-built, 3D-printed humanoid robot. This pipeline bridges the gap between simulated learning and real-world application, enabling scalable and adaptable robot control.

Humanoid Robot Simulation · MuJoCo · Goal-Conditioned Reinforcement Learning · Humanoid Robot Locomotion · Reinforcement Learning · PPO · SAC · GAIL

Precision AI Forestry

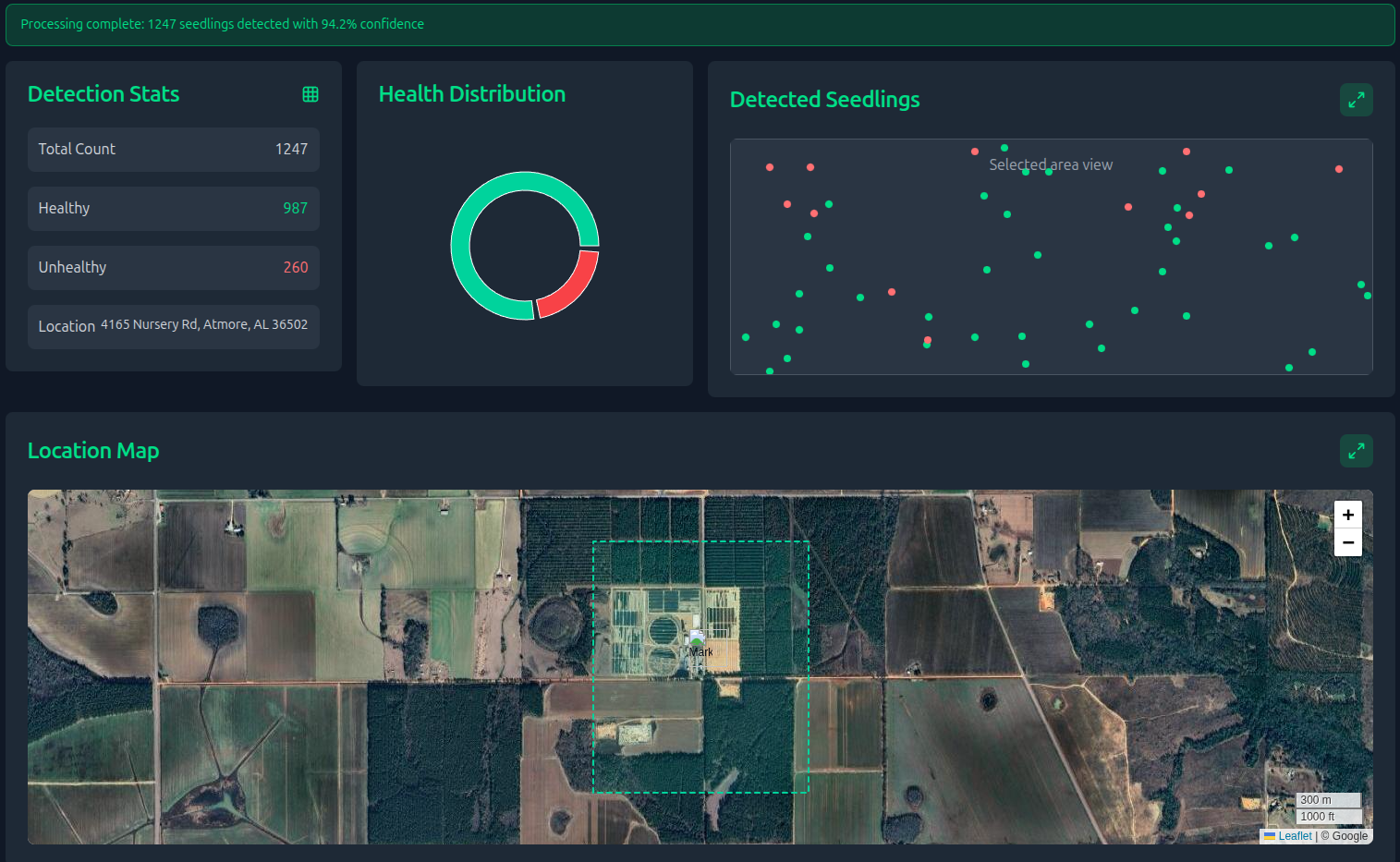

AI-Driven Insights for Precision Forest Health and Resource Assessment

We leverage vision-language models to automate labor-intensive tasks traditionally performed by humans. Our objective is to deploy computational solutions for forest surveillance, nursery monitoring, and disease control applications.

Computer Vision · LLM · Vision-Language Model · Llama-3-8B · CLIP-L/14

Assistant Robot

Assistance Robot for Dementia Care

This project served as a bridge between my algorithmic work and real-world robot locomotion control. Built on ROS (Robot Operating System), it enabled reliable human tracking and medication recognition, allowing the robot to perform assistive tasks in dynamic environments.

Human Tracking · Locomotion · Object Detection · SLAM · CLIP-L/14 · Llama-2-7B · ROS

Augmented Reality-Powered Construction Field Lofting Tool

This project aims to automate layout measurement on large-scale construction sites using a portable lofting device. Replacing traditional manual methods, the system combines laser projection with visual-depth sensing to accurately execute layout tasks. By interpreting building schematics in real time, it significantly reduces human error and cuts layout time by up to 80%.

Real-scale Augmented Reality · ILDA Files · Prototyped Device

Few Shot Robot Task Learning

A Keyframe-Driven Framework for Robotic Manipulation Learning

This framework aims to let people without robotics expertise train robots for tasks in their own domains, using intuitive interfaces and adaptable learning frameworks.

Latent Space (VAE) for RGB-D Data · Robot Manipulation Task · Trajectory Planning · Reinforcement Learning (SAC, GAIL) · VAE · Hierarchical Reinforcement Learning

L2 Autonomous Driving

L2 Autonomous Driving Project

This project was built on the open-source autonomous driving framework Autoware. Given limited computing resources and manpower, our research focused primarily on vehicle detection, lane detection, and instance segmentation. Additional work included LiDAR data processing, sensor calibration, and the development and modification of on-vehicle computing hardware and devices.

Instance Segmentation · Pixel-Level Relationship Matrix · Instance Distinguish Method · Bottom-up Algorithm